Time series analysis has become an essential skill for software engineers and data scientists pursuing roles in machine learning (ML) at top-tier tech companies like FAANG, OpenAI, and Tesla. With the increasing importance of predictive analytics, anomaly detection, and forecasting, companies heavily rely on time series data to make informed decisions. This blog serves as a comprehensive guide to help you prepare for ML interviews, particularly focusing on time series analysis—a frequently tested topic.

In this guide, we will cover the basics of time series data, key concepts, common algorithms, real-world applications, frequently asked interview questions at FAANG and other leading companies, and practical tips to ace time series questions in interviews. By the end of this article, you’ll be equipped with the knowledge and preparation tools to tackle time series questions confidently.

Understanding Time Series Data

Time series data is distinct from other types of data because it is inherently sequential, with each data point being dependent on time. Time series analysis focuses on understanding and analyzing this sequence of data points, which are typically recorded at consistent intervals over time. What makes time series data unique is its temporal dependencies, which means that the order in which the data points occur matters significantly. Unlike random or independent data, past values in a time series can influence future values.

What Makes Time Series Data Unique?

At its core, time series data is fundamentally about time-based relationships. A few key features differentiate it from other types of data:

- Sequential Nature: Each data point is dependent on the previous one. For instance, today’s stock price may depend on yesterday’s price.

- Temporal Dependence: Time is a key variable. In contrast to datasets where observations are independent of each other, time series data points are ordered chronologically.

- Autocorrelation: In time series, there’s often a correlation between current and past observations. This means that events closer in time are more likely to be related than those further apart.

Common Examples of Time Series Data

Understanding time series data becomes clearer with real-world examples:

- Stock Market Prices: Historical prices of a stock over time, recorded at intervals (daily, weekly, etc.).

- Weather Data: Temperature, humidity, and wind speed collected over time.

- Server Logs: Time-stamped records of server activity, often used to detect performance issues or anomalies.

- Website Traffic: The number of visitors to a website tracked hourly, daily, or weekly.

- Sales Forecasting: Historical sales data collected at regular intervals, which helps predict future sales.

Why Time Series Matters in Machine Learning

For machine learning engineers, mastering time series data is crucial for several reasons. Many real-world applications depend on sequential data analysis, from stock price forecasting to anomaly detection in server performance logs. Top companies use time series analysis to drive predictive analytics in domains such as e-commerce (demand forecasting), finance (stock prediction), and tech (server uptime predictions).

Having a thorough understanding of time series data will allow candidates to address complex ML interview questions that test problem-solving, forecasting, and the ability to work with temporally dependent data. Moreover, knowing how to model time series data effectively is critical for improving the accuracy of machine learning models.

Key Concepts in Time Series Analysis

Time series analysis is built on a few fundamental concepts. Understanding these concepts is essential, as they often form the basis of interview questions. Let’s walk through some of the most critical terms you’ll encounter.

Stationarity

A time series is said to be stationary if its statistical properties (mean, variance, autocorrelation, etc.) remain constant over time. Non-stationary time series, where the mean or variance changes over time, are more challenging to model because they exhibit trends or seasonality. Many statistical models, such as ARIMA, assume that the time series is stationary, which is why transforming a non-stationary series into a stationary one (via differencing, detrending, or transformation) is a common preprocessing step.

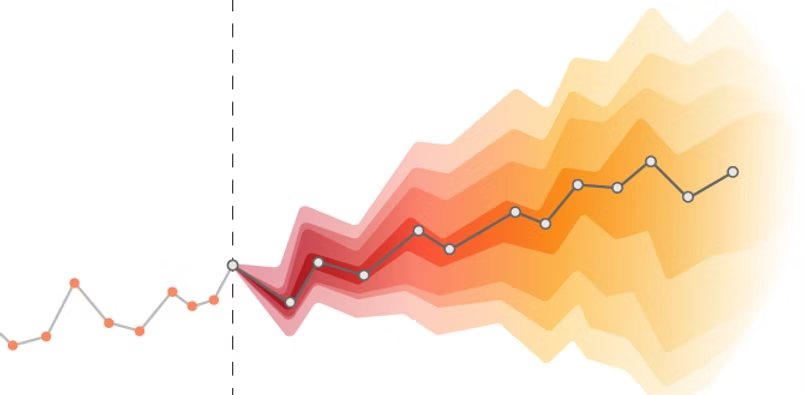
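As a rough illustration of the differencing step mentioned above, the sketch below uses a hypothetical helper, `difference`, on two made-up series. It assumes plain Python lists and is not a substitute for a formal stationarity test.

```python
def difference(series, lag=1):
    """Return the lag-`lag` differences: series[t] - series[t - lag]."""
    return [series[t] - series[t - lag] for t in range(lag, len(series))]


# A series with a linear upward trend: its mean drifts, so it is not stationary.
trended = [3 + 2 * t for t in range(8)]
detrended = difference(trended)  # every difference equals the slope

# A repeating pattern of period 4: differencing at lag 4 removes it.
seasonal = [10, 20, 30, 40] * 3
deseasonalized = difference(seasonal, lag=4)

print(detrended)
print(deseasonalized)
```

First-order differencing targets a trend in the mean, while differencing at the seasonal lag targets a repeating pattern. Real data usually needs a check after each step to see whether more differencing is warranted.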

Trend

The trend represents a long-term movement in the time series data. If the data tends to increase or decrease over time, it shows a trend. Understanding whether a dataset has an upward, downward, or flat trend is crucial in determining how the model will make future predictions.

Seasonality

Seasonality refers to periodic fluctuations in a time series that occur at regular intervals due to repeating events, such as daily, weekly, monthly, or yearly patterns. For example, retail sales often spike during the holiday season, demonstrating clear seasonality. Identifying seasonal components in time series data is important for improving model accuracy, particularly for forecasting tasks.

Autocorrelation

Autocorrelation measures the relationship between a variable’s current value and its past values. In time series data, autocorrelation helps identify patterns and dependencies, such as whether an increase in a variable today is likely to lead to an increase tomorrow. Autocorrelation functions (ACF) and partial autocorrelation functions (PACF) are tools that help quantify these dependencies at different time lags.
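To make the ACF concrete, here is a minimal sketch of the sample autocorrelation computed from scratch. The function name `acf` and the toy series are illustrative assumptions; in practice a library routine would normally be used instead.

```python
def acf(series, max_lag):
    """Sample autocorrelation for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    denom = sum(c * c for c in centered)
    return [
        sum(centered[t] * centered[t - k] for t in range(k, n)) / denom
        for k in range(max_lag + 1)
    ]


# Toy series that repeats every 4 steps.
series = [0, 1, 0, -1] * 6
values = acf(series, max_lag=6)

# The lag (other than 0) with the strongest positive autocorrelation
# hints at the length of the repeating cycle.
best_lag = max(range(1, 7), key=lambda k: values[k])
print(best_lag, round(values[best_lag], 3))
```

On this toy series the strongest positive autocorrelation falls at the cycle length, which is the same signal one looks for when reading an ACF plot for seasonality.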

Lag

Lag refers to the number of periods by which a variable is shifted. A lag of 1 means that today’s value is compared to yesterday’s value. Lag values are used to capture the autocorrelations between current and past observations. In machine learning models, particularly in time series forecasting, lagged variables are often used as features to improve predictions.
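A small sketch of how lagged values can be turned into model features follows. The helper name `make_lag_features` is hypothetical; the resulting rows could be passed to any regression model.

```python
def make_lag_features(series, n_lags):
    """Build (features, targets) where each row holds the previous n_lags values."""
    rows, targets = [], []
    for t in range(n_lags, len(series)):
        # Most recent lag first: [y[t-1], y[t-2], ..., y[t-n_lags]]
        rows.append([series[t - k] for k in range(1, n_lags + 1)])
        targets.append(series[t])
    return rows, targets


X, y = make_lag_features([1, 2, 3, 4, 5], n_lags=2)
print(X)
print(y)
```

The first `n_lags` observations have no complete history, so they appear only as features and never as targets.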

Time Series Decomposition

Time series decomposition is the process of breaking a time series down into its constituent parts, typically trend, seasonality, and residual components. This decomposition helps to better understand the structure of the data and can improve forecasting accuracy by treating each component separately. Additive decomposition assumes that the components are added together (e.g., data = trend + seasonality + residuals), while multiplicative decomposition assumes that they are multiplied (e.g., data = trend × seasonality × residuals).
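The additive case can be sketched in a few lines. The function below is a simplified, hypothetical version of classical decomposition that assumes an odd seasonal period, so that a plain centered moving average can serve as the trend estimate. Library implementations handle even periods and edge effects more carefully.

```python
def decompose_additive(series, period):
    """Classical additive decomposition; this sketch only handles odd periods."""
    if period % 2 == 0:
        raise ValueError("this sketch only handles odd periods")
    n, half = len(series), period // 2

    # Trend: centered moving average, undefined (None) at the edges.
    trend = [None] * n
    for t in range(half, n - half):
        trend[t] = sum(series[t - half : t + half + 1]) / period

    # Seasonal: average detrended value for each position in the cycle.
    buckets = [[] for _ in range(period)]
    for t in range(n):
        if trend[t] is not None:
            buckets[t % period].append(series[t] - trend[t])
    means = [sum(b) / len(b) for b in buckets]
    offset = sum(means) / period  # re-center so seasonal effects sum to zero
    seasonal = [means[t % period] - offset for t in range(n)]

    # Residual: whatever trend and seasonality do not explain.
    residual = [
        None if trend[t] is None else series[t] - trend[t] - seasonal[t]
        for t in range(n)
    ]
    return trend, seasonal, residual


pattern = [1, -1, 0]  # made-up seasonal effect with period 3
series = [t + pattern[t % 3] for t in range(12)]  # linear trend + seasonality
trend, seasonal, residual = decompose_additive(series, period=3)
print(trend[1:11])
print(seasonal[:3])
```

Because the toy series is built as trend plus seasonality with no noise, the recovered residuals are zero wherever the trend is defined.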

Autoregression

Autoregression (AR) refers to a type of model where the current value of the time series is regressed on its previous values. The basic idea is that past data can be used to predict future data. The order of an AR model, usually written p, is the number of previous time steps used in the model.
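As a hedged illustration, an AR model of order 1 can be fitted with ordinary least squares by regressing each value on the one before it. The helper `fit_ar1` and the synthetic series are assumptions made for this sketch.

```python
def fit_ar1(series):
    """Least-squares fit of x[t] = c + phi * x[t-1]; returns (c, phi)."""
    prev, curr = series[:-1], series[1:]
    n = len(prev)
    mean_prev, mean_curr = sum(prev) / n, sum(curr) / n
    cov = sum((p - mean_prev) * (q - mean_curr) for p, q in zip(prev, curr))
    var = sum((p - mean_prev) ** 2 for p in prev)
    phi = cov / var
    return mean_curr - phi * mean_prev, phi


# Synthetic, noise-free series generated by x[t] = 2 + 0.5 * x[t-1].
series = [0.0]
for _ in range(6):
    series.append(2.0 + 0.5 * series[-1])

c, phi = fit_ar1(series)
next_value = c + phi * series[-1]  # one-step-ahead forecast
print(round(c, 6), round(phi, 6), round(next_value, 6))
```

With noisy data the estimates would only approximate the generating coefficients, and higher orders require a multiple regression on several lags.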

Moving Average

Moving average (MA) models express the current value of a time series as a linear combination of past forecast errors (the residuals of earlier predictions). This is distinct from a moving-average smoother, which averages past observations to smooth out short-term fluctuations and reveal longer-term trends. MA terms are often combined with autoregressive terms to form ARMA or ARIMA models.

Understanding these key concepts will provide you with a solid foundation for solving time series problems in machine learning interviews. Many interview questions focus on your ability to identify patterns (like seasonality or trends) and to transform non-stationary data into a format that can be analyzed with standard statistical models.

Common Algorithms and Models for Time Series Analysis

A variety of models are available for time series forecasting and analysis, and knowing when and how to apply them is critical for ML interviews. Let’s explore some of the most widely used models.

Statistical Models

ARIMA (AutoRegressive Integrated Moving Average)

ARIMA is one of the most popular models for time series forecasting. It combines three key components: autoregression (AR), differencing (I), and moving average (MA).

- Autoregression (AR): A regression of the time series on its own lagged values.

- Integrated (I): Differencing of the raw observations to make the time series stationary.

- Moving Average (MA): Modeling the dependence of an observation on the residual errors from previous time steps.

ARIMA is useful for datasets that are non-stationary but can be made stationary through differencing. The parameters (p, d, q) are used to specify the order of the AR, I, and MA components.
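To show how the three orders fit together, the sketch below hand-rolls a forecast for the special case (p, d, q) = (1, 1, 0): difference once, fit a single AR coefficient on the differences, then add the forecast differences back onto the last observed level. The function name and data are illustrative assumptions, and real libraries estimate ARIMA parameters with more robust methods than this least-squares shortcut.

```python
def forecast_arima_110(series, steps):
    """Forecast with a hand-rolled ARIMA(1, 1, 0) and no constant term."""
    # d = 1: work with first differences instead of raw levels.
    diffs = [series[t] - series[t - 1] for t in range(1, len(series))]

    # p = 1: least-squares AR coefficient on the differenced series.
    numerator = sum(a * b for a, b in zip(diffs[1:], diffs[:-1]))
    denominator = sum(b * b for b in diffs[:-1])
    phi = numerator / denominator

    forecasts, level, last_diff = [], series[-1], diffs[-1]
    for _ in range(steps):
        last_diff = phi * last_diff  # forecast the next difference
        level += last_diff           # undo the differencing
        forecasts.append(level)
    return forecasts


series = [0, 8, 12, 14, 15]  # increments shrink by half each step
forecasts = forecast_arima_110(series, steps=2)
print(forecasts)
```

The key point is that the model is fitted on the differenced scale, and the forecasts must be integrated back to the original scale before they are reported.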

SARIMA (Seasonal ARIMA)

SARIMA extends ARIMA by adding components that capture seasonality. This model is suitable when the data exhibits periodic patterns (e.g., monthly sales data). SARIMA allows for the modeling of both seasonality and non-seasonal trends, making it a more flexible and powerful model for many time series forecasting tasks.

Exponential Smoothing

Exponential smoothing is a technique used to smooth out short-term fluctuations and highlight longer-term trends. Unlike simple moving averages, exponential smoothing assigns exponentially decreasing weights to past observations, meaning that recent data points are given more weight than older ones. Simple exponential smoothing models only the level of the series; extensions such as Holt's linear method and Holt-Winters add explicit trend and seasonal components, which makes the family useful when the data has a clear trend or seasonality.
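The difference in weighting is easiest to see side by side. The two helpers below are minimal sketches with hypothetical names; `alpha` is the smoothing factor between 0 and 1, and initializing the level with the first observation is one common convention among several.

```python
def simple_moving_average(series, window):
    """Equal weights over the last `window` observations."""
    return [
        sum(series[t - window + 1 : t + 1]) / window
        for t in range(window - 1, len(series))
    ]


def simple_exponential_smoothing(series, alpha):
    """Weights decay geometrically: the newest point gets weight alpha."""
    level = series[0]  # initialize with the first observation
    smoothed = [level]
    for x in series[1:]:
        level = alpha * x + (1 - alpha) * level
        smoothed.append(level)
    return smoothed


sma = simple_moving_average([1, 2, 3, 4, 5], window=3)
ses = simple_exponential_smoothing([10, 20, 30], alpha=0.5)
print(sma)
print(ses)
```

A larger `alpha` makes the smoothed series react faster to new data, while a smaller one produces a smoother but slower-moving result.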

Machine Learning Models

LSTM (Long Short-Term Memory Networks)

LSTM is a type of recurrent neural network (RNN) designed to handle sequential data with long-term dependencies. Unlike traditional RNNs, which struggle to retain information across many time steps, LSTMs use gating mechanisms to remember important information for long periods, making them well suited to time series forecasting tasks where distant past observations influence future predictions. LSTMs have been widely adopted for complex sequence tasks such as stock price prediction and speech recognition.

Prophet (Facebook’s Forecasting Tool)

Prophet is an open-source forecasting tool developed by Facebook that is specifically designed for handling time series with strong seasonal components. Prophet is intuitive, easy to use, and handles missing data and outliers effectively. It works well for daily, weekly, or yearly data with clear seasonal patterns.

Random Forest for Time Series

Although Random Forest is a decision tree-based model typically used for classification and regression tasks, it can also be applied to time series problems. Random Forest can be adapted for time series forecasting by treating lagged observations as input features. This approach works well when the time series exhibits complex non-linear patterns that statistical models like ARIMA cannot capture.

Use Cases for Each Model

- ARIMA: Effective for time series data without seasonality but with a strong trend, such as stock price prediction.

- SARIMA: Ideal for time series with seasonal patterns, such as monthly sales forecasting.

- LSTM: Useful for complex, non-linear time series problems with long-term dependencies, such as speech recognition or advanced financial forecasting.

- Prophet: Best for time series with strong seasonal effects and missing data, such as web traffic forecasting.

- Random Forest: Suitable for non-linear time series forecasting, especially when dealing with a high number of features or predictors.

Understanding these models and knowing when to apply each one will give you a strong edge in ML interviews. Make sure to practice implementing these models and interpreting their outputs, as interviewers may ask you to compare the pros and cons of different approaches or even code a simple model during a technical interview.

Real-World Applications of Time Series Analysis in ML

Time series analysis plays a pivotal role in a wide variety of real-world applications, especially in industries where predictions or anomaly detection are vital to business success. Below are a few examples of how time series analysis is applied in the real world, and why it’s an essential skill for machine learning engineers.

Stock Market Prediction

Predicting stock prices using historical market data is one of the most well-known applications of time series analysis. By analyzing trends and patterns over time, machine learning models can help forecast stock price movements, giving investors valuable insights. Machine learning models like LSTM, ARIMA, and SARIMA are widely used in this field, especially by hedge funds, trading firms, and fintech companies.

Anomaly Detection in Server Logs

Tech companies like Google, Facebook, and Tesla heavily rely on time series analysis to monitor server performance and detect anomalies in real time. For example, if server response times suddenly spike, it may indicate a hardware issue or cyberattack. Time series models like ARIMA and Random Forest can be used to forecast expected server behavior, and any deviation from the norm can be flagged as an anomaly.
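The forecast-and-compare idea can be sketched with a deliberately simple stand-in for the forecasting model: the mean of a trailing window plays the role of the expected value, and a point is flagged when it deviates by more than `k` standard deviations. The function name, window size, threshold, and response-time values are all illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(series, window, k=3.0):
    """Return indices whose value deviates from the trailing mean by > k stdevs."""
    flagged = []
    for t in range(window, len(series)):
        history = series[t - window : t]
        expected = mean(history)            # stand-in for a model forecast
        spread = stdev(history) or 1e-9     # avoid a zero threshold on flat data
        if abs(series[t] - expected) > k * spread:
            flagged.append(t)
    return flagged


# Made-up response times in milliseconds with one spike.
response_ms = [10, 11, 10, 11, 10, 11, 50, 10, 11]
anomalies = flag_anomalies(response_ms, window=4)
print(anomalies)
```

One design caveat: once a spike enters the trailing window it inflates the standard deviation, which can mask anomalies that follow shortly after. Production systems often use robust statistics or exclude flagged points from the history for that reason.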

Demand Forecasting in Retail

Retailers, especially during the holiday season, depend on accurate demand forecasts to avoid overstocking or stockouts. By analyzing historical sales data, retailers can predict future demand, optimize inventory management, and plan for sales promotions. Time series forecasting models like SARIMA and Prophet are commonly used for this purpose.

Energy Consumption Forecasting

Utility companies rely on time series analysis to predict energy demand based on historical consumption patterns. Accurate energy demand forecasts allow companies to optimize energy production and prevent blackouts. Machine learning models, combined with time series analysis, can even incorporate weather patterns, which significantly affect energy consumption.

Case Studies from FAANG and Tesla

- Google: Uses time series models to optimize their cloud infrastructure, predicting server loads based on historical data.

- Amazon: Leverages time series forecasting for demand prediction and inventory management across its global network of warehouses.

- Tesla: Uses time series data from its fleet of vehicles to predict battery performance and schedule maintenance checks. This data is also critical for forecasting energy consumption in Tesla’s Powerwall systems.

These real-world examples highlight the importance of time series analysis in machine learning applications. Mastering time series models and understanding their use cases will make you a strong candidate in ML interviews at leading companies.

10 Most Frequently Asked Time Series Questions in FAANG, OpenAI, Tesla Interviews

During machine learning interviews at companies like FAANG, OpenAI, and Tesla, time series analysis is a common focus area. Below are 10 frequently asked time series questions, along with a brief explanation or approach to each.

- How do you detect seasonality in a time series dataset?

  - Answer: Use autocorrelation plots or spectral analysis to identify recurring patterns. Seasonality will often manifest as peaks at regular intervals in the autocorrelation function (ACF).

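- Explain ARIMA and how you would choose parameters (p, d, q).

  - Answer: ARIMA stands for AutoRegressive Integrated Moving Average. Choose d as the number of differences needed to make the series stationary, which can be checked with a test such as the Augmented Dickey-Fuller test. Then use the PACF plot to suggest p and the ACF plot to suggest q. A grid search over candidate orders, compared using an information criterion such as AIC, can refine these values.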

- What is the difference between a stationary and non-stationary time series?

  - Answer: A stationary series has constant statistical properties (mean, variance) over time, while a non-stationary series exhibits trends or seasonality. Differencing or detrending can make a non-stationary series stationary.

- How would you handle missing data in a time series?

  - Answer: Use techniques such as forward fill, backward fill, or interpolation. For more advanced models, machine learning algorithms can be used to predict missing values based on surrounding data.

- How do LSTMs improve time series forecasting over traditional methods?

  - Answer: LSTMs can capture long-term dependencies in the data and handle non-linear relationships, making them ideal for complex, non-linear time series datasets where ARIMA and other statistical models may fall short.

- How would you forecast multiple time series simultaneously?

  - Answer: Use multivariate models such as vector autoregression (VAR), or machine learning models that take multiple time series as input features. With LSTMs, multiple time series can be fed in together as multivariate input.

- Describe a time series anomaly detection approach.

  - Answer: Use models like ARIMA or machine learning models (e.g., Random Forest) to forecast expected values and detect anomalies by comparing the actual data with the forecast. Deviations beyond a threshold indicate anomalies.

- How would you validate the accuracy of a time series model?

  - Answer: Use validation schemes that preserve temporal order, such as rolling-origin (walk-forward) cross-validation, rather than random k-fold splits that would leak future information into training. Evaluate the forecasts with error metrics such as RMSE (Root Mean Square Error), MAPE (Mean Absolute Percentage Error), and MAE (Mean Absolute Error).

- How do you decompose a time series, and why is it important?

  - Answer: Decompose a time series into trend, seasonality, and residuals using methods like classical decomposition or STL (Seasonal and Trend decomposition using Loess). This helps in understanding the underlying structure of the data and improving model accuracy.

- Can you explain the difference between exponential smoothing and moving averages?

  - Answer: Both methods smooth time series data, but exponential smoothing assigns exponentially decreasing weights to older observations, while a simple moving average gives equal weight to all past data points within the window.
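For the missing-data question above, the two simplest techniques can be sketched directly. The helpers below are hypothetical and assume gaps are marked with `None`; leading or trailing gaps are left unfilled by the interpolation helper.

```python
def forward_fill(series):
    """Replace each gap with the most recent observed value."""
    filled, last = [], None
    for x in series:
        if x is not None:
            last = x
        filled.append(last)
    return filled


def interpolate_linear(series):
    """Fill interior gaps on a straight line between the neighboring observations."""
    filled = list(series)
    known = [i for i, x in enumerate(series) if x is not None]
    for left, right in zip(known, known[1:]):
        step = (series[right] - series[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = series[left] + step * (i - left)
    return filled


readings = [1.0, None, None, 4.0, None, 6.0]  # made-up sensor readings
print(forward_fill(readings))
print(interpolate_linear(readings))
```

Forward fill uses only past information, so it is safe for features in a forecasting model. Interpolation looks at the next observed value, which is fine for offline cleaning but leaks future information if applied inside a live forecasting pipeline.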

These questions are a good representation of the type of time series challenges that engineers face in interviews with companies like Google, Facebook, Tesla, and OpenAI. Being familiar with these questions and preparing comprehensive answers will improve your confidence during the interview.

How to Prepare for Time Series Questions in ML Interviews

Preparing for time series questions in machine learning interviews requires a combination of theory, practical implementation, and problem-solving skills. Here are some effective strategies to help you excel:

Practice with Real-World Datasets

Platforms like Kaggle and UCI Machine Learning Repository offer time series datasets that you can use for practice. Choose datasets that cover different industries—stock market data, weather data, retail sales—to get a well-rounded experience.

Understand the Theory Behind Models

Many interview questions will focus on the underlying mechanics of time series models like ARIMA, SARIMA, and LSTM. Make sure to understand how each model works, when to apply it, and how to tune its parameters. Review key concepts like stationarity, autocorrelation, and lag to deepen your theoretical knowledge.

Mock Interviews and Coding Practice

Practice coding time series models in Python using libraries like statsmodels, prophet (formerly distributed as fbprophet), and tensorflow. Mock interviews, especially those offered by InterviewNode, can help simulate real interview conditions, allowing you to practice solving time series problems under time constraints.

Data-Driven Communication

In interviews, it’s not just about solving the problem; it’s about communicating your thought process clearly. Make sure you can explain how you would preprocess time series data, select a model, and evaluate its performance. Use data-driven examples to support your explanations.
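When explaining the evaluation step, it can help to have a concrete picture in mind. The sketch below shows a walk-forward evaluation, in which each forecast uses only data observed before the point being predicted. The function names, the naive last-value forecaster, and the data are illustrative assumptions.

```python
from math import sqrt


def walk_forward_pairs(series, min_train, forecast_fn):
    """One-step-ahead (actual, predicted) pairs using only past data at each step."""
    pairs = []
    for t in range(min_train, len(series)):
        prediction = forecast_fn(series[:t])  # history strictly before t
        pairs.append((series[t], prediction))
    return pairs


def mae(pairs):
    return sum(abs(a - p) for a, p in pairs) / len(pairs)


def rmse(pairs):
    return sqrt(sum((a - p) ** 2 for a, p in pairs) / len(pairs))


def mape(pairs):
    # Undefined when an actual value is zero.
    return 100 * sum(abs((a - p) / a) for a, p in pairs) / len(pairs)


def naive_forecast(history):
    """Baseline: predict that the next value equals the last observed one."""
    return history[-1]


series = [10, 12, 11, 13, 12, 14]
pairs = walk_forward_pairs(series, min_train=3, forecast_fn=naive_forecast)
print(pairs)
print(round(mae(pairs), 3), round(rmse(pairs), 3), round(mape(pairs), 3))
```

Reporting a candidate model's errors next to a naive baseline like this one is a simple way to show whether the added complexity is paying off.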

InterviewNode’s Edge: How We Help You Prepare

InterviewNode specializes in helping software engineers and ML candidates excel in their technical interviews, especially in challenging topics like time series analysis. Here’s how InterviewNode can give you an edge:

- Mock Interviews: Our platform offers mock interview sessions that simulate real-world ML interviews, with a focus on time series questions.

- Tailored Feedback: After each session, you’ll receive detailed feedback on your performance, highlighting areas for improvement.

- Exclusive Resources: We offer curated datasets, coding exercises, and walkthroughs to help you master time series algorithms.

- Success Stories: Our clients have successfully landed roles at top tech companies, including FAANG and Tesla, thanks to our targeted preparation approach.

With InterviewNode, you’ll be well-prepared to tackle time series questions and showcase your skills during ML interviews.

Mastering time series analysis is essential for anyone preparing for machine learning interviews at top tech companies. From understanding the fundamentals of time series data to diving deep into advanced models like ARIMA, SARIMA, and LSTM, being well-versed in these topics will set you apart from other candidates.

To succeed, practice with real-world datasets, get hands-on experience with different models, and make use of resources like InterviewNode’s mock interview sessions. With a solid preparation strategy, you’ll be well-equipped to ace any time series question that comes your way.
