1. Introduction

Recommendation systems have become an integral part of our digital lives. Whether it’s Netflix suggesting a movie, Amazon recommending products, or Spotify curating a playlist, these systems guide users to relevant content based on their preferences. For software engineers, particularly those aspiring to work in machine learning (ML) or data science roles at top-tier companies like FAANG (Facebook, Amazon, Apple, Netflix, Google), understanding how recommendation systems work is not just useful—it’s essential.

Interviews at these companies often focus on key machine learning concepts, and recommendation systems are a favorite subject. Mastering the knowledge and problem-solving techniques behind recommendation engines can set you apart in competitive ML interviews. This blog will dive deep into what it takes to crack interviews focused on recommendation systems, equipping you with the knowledge, techniques, and practical tips to help you land your dream job.

2. Understanding Recommendation Systems

What Are Recommendation Systems?

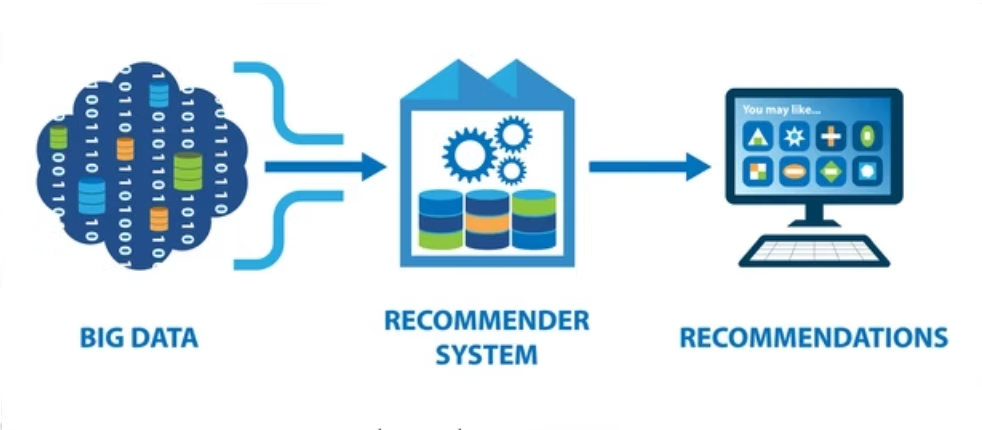

Recommendation systems (RS) are algorithms designed to suggest products, content, or services to users based on patterns, preferences, and interactions. They aim to deliver personalized recommendations that improve user experience, engagement, and conversion rates, and are pivotal in industries like e-commerce, streaming services, and social media.

Recommendation systems are an essential part of platforms like Amazon, Netflix, and YouTube, where users expect personalized suggestions. This ability to provide recommendations at scale, often with vast datasets, makes recommendation systems a crucial skill for software engineers, data scientists, and machine learning engineers.

Types of Recommendation Systems

There are three primary types of recommendation systems, each with distinct methods and advantages:

1. Collaborative Filtering

Collaborative filtering relies on the collective preferences of a group of users to make recommendations. It operates under the assumption that if User A and User B have similar preferences, User A’s highly rated items might be relevant to User B as well. Collaborative filtering is commonly implemented with one of three approaches:

- User-User Collaborative Filtering: This method finds similarities between users based on their behavior (e.g., purchases, views, likes) and recommends items that similar users have interacted with. It can struggle with scalability because it requires comparing every user with every other user, which becomes computationally expensive as the number of users grows.

- Item-Item Collaborative Filtering: Instead of focusing on users, item-item collaborative filtering compares items. If a user likes an item, the system recommends similar items. For example, if you purchase a laptop on Amazon, item-item collaborative filtering might suggest related accessories such as a laptop sleeve or a mouse. This approach is more scalable than user-user collaborative filtering, especially in systems with large numbers of users but fewer items.

- Matrix Factorization: This method addresses the sparsity and scalability limits of the neighborhood-based approaches above by decomposing the large user-item interaction matrix into smaller matrices that capture latent factors. User preferences and item characteristics are represented as vectors in a lower-dimensional space, making it easier to compute similarities and generate recommendations. Matrix factorization techniques, such as Singular Value Decomposition (SVD) and Alternating Least Squares (ALS), are commonly used for this purpose.
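To make the item-item idea concrete, here is a minimal sketch that scores item similarity with cosine similarity over user ratings. The toy `ratings` data and the helper names are assumptions made for illustration; a production system would work on sparse matrices rather than dictionaries.

```python
from math import sqrt

# Hypothetical toy ratings: user -> {item: rating}
ratings = {
    "alice": {"laptop": 5, "mouse": 4, "sleeve": 5},
    "bob": {"laptop": 4, "mouse": 5},
    "carol": {"laptop": 5, "sleeve": 4, "novel": 2},
    "dave": {"novel": 5, "bookmark": 4},
}

def item_vectors(ratings):
    """Invert user -> item ratings into item -> {user: rating}."""
    items = {}
    for user, user_ratings in ratings.items():
        for item, rating in user_ratings.items():
            items.setdefault(item, {})[user] = rating
    return items

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    shared = set(a) & set(b)
    dot = sum(a[u] * b[u] for u in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def similar_items(target, ratings, top_n=2):
    """Rank the other items by how similarly users rated them."""
    vectors = item_vectors(ratings)
    scores = [
        (other, cosine(vectors[target], vec))
        for other, vec in vectors.items()
        if other != target
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:top_n]

print(similar_items("laptop", ratings))
```

On this toy data the sleeve and the mouse rank closest to the laptop, because the same users rated them together.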

2. Content-Based Filtering

Content-based filtering recommends items by analyzing the features of items themselves. For example, if you like a movie with certain attributes (e.g., genre, actors, director), the system will recommend other movies with similar attributes. This technique works well for new items because it doesn’t need any interaction history for the item, only its metadata. For new users it still needs some signal, such as a few liked items or stated interests, but far less than collaborative filtering. This is why it handles the “cold start” problem better than collaborative filtering.

However, content-based systems have limitations. They often recommend items that are too similar to what the user has already interacted with, which can reduce the diversity of recommendations. Moreover, the system must be able to accurately extract and process item features, which can be challenging for complex items like videos or music.
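A minimal sketch of content-based matching, assuming each item is described by a set of attribute tags and that Jaccard overlap is an acceptable similarity measure. The catalog, the tags, and the function names are hypothetical.

```python
# Hypothetical catalog: title -> set of attribute tags
catalog = {
    "Space Saga": {"sci-fi", "adventure", "director:lee"},
    "Star Drift": {"sci-fi", "adventure", "director:kim"},
    "Quiet Harbor": {"drama", "romance", "director:kim"},
    "Laugh Track": {"comedy"},
}

def jaccard(a, b):
    """Share of tags two items have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def recommend_similar(liked_title, catalog, top_n=2):
    """Rank other titles by attribute overlap with a title the user liked."""
    liked = catalog[liked_title]
    scored = [
        (title, jaccard(liked, tags))
        for title, tags in catalog.items()
        if title != liked_title
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]

print(recommend_similar("Space Saga", catalog))
```

The sketch also shows the diversity limitation described above: only titles that share tags with the liked item can score above zero.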

3. Hybrid Models

Hybrid recommendation systems combine collaborative filtering and content-based filtering to deliver more accurate and diverse recommendations. They overcome the shortcomings of individual models by using collaborative filtering to identify user preferences and content-based filtering to analyze item attributes.

For example, Netflix uses a hybrid model that combines user viewing habits (collaborative filtering) with metadata about shows (content-based filtering) to recommend new movies or TV shows. Hybrid models can also reduce the cold start problem by using content-based techniques for new items and collaborative filtering for users with extensive histories.

Use Cases of Recommendation Systems

- Amazon: Amazon’s recommendation engine is known for its effectiveness in driving product discovery. It uses item-item collaborative filtering to suggest items that are frequently bought or viewed together with items a customer has already interacted with. For example, if a customer buys a laptop, Amazon might recommend a laptop bag or a mouse because other customers often purchase those items alongside laptops.

- Netflix: Netflix’s recommendation system uses a combination of collaborative filtering, content-based methods, and deep learning. It analyzes your viewing history, ratings, and behaviors to recommend movies and TV shows that you’re likely to enjoy. It also looks at what similar users have watched, creating personalized recommendations that help retain users.

- Spotify: Spotify uses a hybrid recommendation engine that combines collaborative filtering and Natural Language Processing (NLP) techniques to analyze song lyrics, moods, and genres. This allows Spotify to recommend songs that align with a user’s taste, whether through direct similarity or contextual analysis of music features.

Understanding these real-world applications of recommendation systems not only helps prepare for interviews but also provides valuable context for building scalable, high-performance systems.

3. Key Concepts to Master for Interviews

To excel in interviews at companies like FAANG, it’s critical to understand both the theoretical concepts and practical applications behind recommendation systems. Below are the five key concepts you must master:

1. Matrix Factorization

Matrix factorization is a core technique in collaborative filtering that reduces a high-dimensional user-item interaction matrix into two lower-dimensional matrices. It helps uncover latent factors that explain user behavior and item characteristics, allowing for better prediction of user preferences. By capturing these latent factors, matrix factorization can generalize better to unseen data, which is especially valuable when user-item interaction data is sparse.

Example: In a movie recommendation system, users and movies can be represented by two separate matrices. Each user’s preferences and each movie’s attributes are embedded in a lower-dimensional space. The system learns these latent factors, such as users preferring certain genres or actors, and then predicts the user’s rating for a movie they haven’t seen.

- Study Singular Value Decomposition (SVD) and Alternating Least Squares (ALS) algorithms, which are common matrix factorization techniques.

- Explore practical applications using libraries such as Scikit-learn and TensorFlow, where you can implement matrix factorization models and fine-tune them for performance.
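The sketch below illustrates the latent-factor idea with plain stochastic gradient descent on the observed ratings. This is a swapped-in training method chosen for brevity, not SVD or ALS. The toy data, the hyperparameter values, and the function names are all assumptions.

```python
import random

def factorize(ratings, n_users, n_items, k=2, lr=0.02, reg=0.02, epochs=1000, seed=0):
    """Learn user factors P and item factors Q so that P[u] . Q[i] approximates r."""
    rng = random.Random(seed)
    P = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.uniform(-0.1, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - sum(P[u][f] * Q[i][f] for f in range(k))
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q

def predict(P, Q, u, i):
    """Predicted rating is the dot product of the user and item factors."""
    return sum(pf * qf for pf, qf in zip(P[u], Q[i]))

# Hypothetical observed (user, item, rating) triples; most pairs are unobserved
observed = [
    (0, 0, 5), (0, 1, 4), (0, 2, 1),
    (1, 0, 4), (1, 1, 5), (1, 3, 1),
    (2, 2, 5), (2, 3, 4), (2, 0, 1),
    (3, 2, 4), (3, 3, 5), (3, 1, 1),
]
P, Q = factorize(observed, n_users=4, n_items=4)
print(round(predict(P, Q, 0, 3), 2))  # user 0 never rated item 3
```

User 0 has no rating for item 3, yet the learned factors still produce a prediction for that pair. This is the generalization to unseen data that the paragraph above describes.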

2. Embeddings (Word2Vec, Item2Vec)

Embeddings are dense vector representations of data items (e.g., words, products) that capture their relationships in a lower-dimensional space. In recommendation systems, embeddings are often used to represent both users and items. These vector representations help to uncover subtle patterns and similarities that can be missed by more traditional methods.

Example: In an e-commerce recommendation system, Item2Vec embeddings can represent products such that items frequently purchased together are placed near each other in the embedding space. This allows the system to recommend related products based on previous interactions.

- Focus on learning embedding techniques such as Word2Vec for text-based items or Item2Vec for general items. Practice by using libraries like Gensim to generate embeddings.

- Understand how embeddings reduce the dimensionality of the data while preserving relationships between items, which can improve both the accuracy and speed of recommendation systems.
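Once embeddings exist, a common pattern is to represent a user as the average of the items they interacted with and to rank candidates by dot product. The sketch below assumes hand-written 3-dimensional vectors standing in for what an Item2Vec-style model might learn; it does not train anything.

```python
# Hypothetical item embeddings (in practice these come from a trained model)
item_vecs = {
    "laptop": [0.9, 0.1, 0.0],
    "mouse": [0.8, 0.2, 0.1],
    "keyboard": [0.7, 0.3, 0.0],
    "novel": [0.0, 0.1, 0.9],
    "bookmark": [0.1, 0.0, 0.8],
}

def mean_vector(vectors):
    """Element-wise average of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def recommend(history, item_vecs, top_n=2):
    """Rank unseen items by similarity to the user's averaged history vector."""
    user_vec = mean_vector([item_vecs[item] for item in history])
    candidates = [
        (item, dot(user_vec, vec))
        for item, vec in item_vecs.items()
        if item not in history
    ]
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return [item for item, _ in candidates[:top_n]]

print(recommend(["laptop", "mouse"], item_vecs))
```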

3. Cold Start Problem

The cold start problem occurs when a recommendation system struggles to provide accurate suggestions for new users or new items due to a lack of interaction history. This is a common challenge in collaborative filtering because it relies on past user behavior.

Strategies to Overcome Cold Start:

- Content-Based Recommendations: For new users or items, content-based methods can be used to provide recommendations based on item features or user preferences (e.g., movie genres or product descriptions).

- Hybrid Models: Combining collaborative filtering with content-based techniques can help alleviate cold start issues. For example, while collaborative filtering waits for more user interactions, content-based recommendations can offer initial suggestions based on item metadata.

Interview Prep: Expect interviewers to ask about how you would handle the cold start problem in various contexts. You might be tasked with designing a system for a startup with limited user data, where you would need to rely more on content-based or hybrid approaches.
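One way to frame a cold start answer is as a routing decision based on how much is known about the user. The sketch below is an illustration only: the threshold of 5 interactions and the popularity fallback for completely unknown users are assumptions, not something prescribed above.

```python
MIN_INTERACTIONS = 5  # assumed threshold; tune per product

def choose_strategy(user_history, declared_interests):
    """Pick a recommendation strategy from the signal available for a user."""
    if len(user_history) >= MIN_INTERACTIONS:
        return "collaborative"   # enough behavior to find similar users or items
    if user_history or declared_interests:
        return "content_based"   # match item metadata to the little we know
    return "popular"             # assumed fallback when nothing is known

print(choose_strategy([], ["sci-fi"]))
```

In an interview, it helps to explain how the system moves a user from one branch to the next as interactions accumulate.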

4. Evaluation Metrics

Evaluating the performance of a recommendation system is crucial to understanding how well it meets user needs. Different metrics focus on different aspects of system performance:

- Precision: Measures the proportion of recommended items that are relevant. High precision means that most of the recommended items are of interest to the user.

- Recall: Reflects the proportion of relevant items that are recommended out of all relevant items available. High recall means the system captures most of the items that the user would have liked.

- F1-Score: The harmonic mean of precision and recall, providing a balanced view of the system’s performance.

- NDCG (Normalized Discounted Cumulative Gain): Measures the quality of the ranking of recommended items. This is especially important for systems like Netflix or YouTube, where the order of recommendations can influence user engagement.

Interview Tip: Be prepared to discuss which metric you would use in different scenarios. For example, if a company prioritizes user satisfaction, you might focus on precision. If the goal is to maximize user engagement or retention, recall might be more important. Familiarize yourself with the trade-offs between these metrics and how they apply to real-world use cases.
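The metrics above are usually computed on the top k recommendations. Here is a minimal sketch, assuming a ranked list of recommended item IDs and binary relevance labels; the function names and sample data are illustrative.

```python
from math import log2

def precision_at_k(recommended, relevant, k):
    """Fraction of the top-k recommendations that are relevant."""
    return sum(1 for item in recommended[:k] if item in relevant) / k

def recall_at_k(recommended, relevant, k):
    """Fraction of all relevant items that appear in the top-k."""
    if not relevant:
        return 0.0
    return sum(1 for item in recommended[:k] if item in relevant) / len(relevant)

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0

def ndcg_at_k(recommended, relevance, k):
    """DCG of the ranking divided by the DCG of an ideal ranking."""
    dcg = sum(
        relevance.get(item, 0) / log2(rank + 2)
        for rank, item in enumerate(recommended[:k])
    )
    ideal = sorted(relevance.values(), reverse=True)[:k]
    idcg = sum(rel / log2(rank + 2) for rank, rel in enumerate(ideal))
    return dcg / idcg if idcg else 0.0

recommended = ["a", "b", "c", "d"]
relevant = {"a", "c", "e"}
p = precision_at_k(recommended, relevant, 4)   # 2 of 4 recommendations are relevant
r = recall_at_k(recommended, relevant, 4)      # 2 of 3 relevant items were found
print(p, r, f1_score(p, r))
print(ndcg_at_k(recommended, {item: 1 for item in relevant}, 4))
```

NDCG rewards placing relevant items near the top: moving "c" from third to second position would raise the score even though precision and recall stay the same.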

5. Scalability Challenges

As recommendation systems grow, especially in large-scale applications like Amazon or Netflix, the system must be scalable enough to handle millions of users and items without significant performance degradation.

- Data Volume: Storing and processing vast amounts of user interaction data in real-time requires efficient algorithms and infrastructure.

- Latency: The recommendation engine must generate suggestions quickly, often in milliseconds, to provide a seamless user experience.

- Computational Complexity: As the number of users and items increases, the system’s algorithms must maintain performance while keeping the computational cost low.

Techniques for Scalability:

- Matrix Factorization: Using matrix factorization methods like SVD helps reduce dimensionality, which makes large-scale data easier to process.

- Distributed Systems: Distributed computing frameworks like Apache Spark or Hadoop are often employed to handle massive datasets in parallel, reducing the time required to train models or generate recommendations.

Interview Focus: You might be asked how you would optimize an algorithm to scale with data size or user growth. Be prepared to discuss strategies for distributing computation across clusters and minimizing computational costs through algorithmic optimizations.
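To illustrate what distributing computation can look like, the sketch below counts item co-occurrences in a map-and-reduce style: each partition is counted independently, then the partial counts are merged. It runs sequentially in plain Python; in a framework such as Spark the per-partition step would run on separate workers. The partition layout and function names are assumptions.

```python
from collections import Counter
from itertools import combinations

def count_pairs(baskets):
    """Map step: count item pairs that co-occur within one partition of baskets."""
    counts = Counter()
    for basket in baskets:
        for pair in combinations(sorted(set(basket)), 2):
            counts[pair] += 1
    return counts

def merge_counts(partials):
    """Reduce step: sum the partial counts from every partition."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total

# Hypothetical purchase baskets, already split into two partitions
partitions = [
    [["laptop", "mouse"], ["laptop", "sleeve", "mouse"]],
    [["mouse", "laptop"], ["novel", "bookmark"]],
]
totals = merge_counts(count_pairs(part) for part in partitions)
print(totals.most_common(1))
```

Because the map step never looks outside its own partition, adding workers scales the counting stage, and only the much smaller partial counts need to be combined.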

4. Common Algorithms Used in Recommendation Systems

Collaborative Filtering

Collaborative filtering is one of the most widely used techniques in recommendation systems, due to its ability to discover user preferences based on the behavior of similar users. It’s often seen in social media platforms, e-commerce, and streaming services.

User-User Collaborative Filtering

User-user collaborative filtering predicts user preferences by finding other users with similar tastes. For instance, if User A and User B both like several of the same movies, the system will suggest to User B the movies that User A has watched and liked but User B has not seen. This method requires comparing users’ past interactions with the system to find relevant items for recommendation.
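A minimal sketch of this idea, assuming Pearson correlation as the similarity measure and a similarity-weighted average of neighbors’ ratings as the prediction. The ratings and the choice to ignore negatively correlated users are assumptions for illustration.

```python
from math import sqrt

# Hypothetical ratings: user -> {movie: rating}
ratings = {
    "A": {"m1": 5, "m2": 4, "m3": 1, "m4": 5},
    "B": {"m1": 4, "m2": 5, "m3": 2},
    "C": {"m1": 1, "m2": 2, "m3": 5, "m4": 1},
}

def pearson(a, b):
    """Pearson correlation over the items both users rated."""
    shared = sorted(set(a) & set(b))
    if len(shared) < 2:
        return 0.0
    mean_a = sum(a[i] for i in shared) / len(shared)
    mean_b = sum(b[i] for i in shared) / len(shared)
    cov = sum((a[i] - mean_a) * (b[i] - mean_b) for i in shared)
    var_a = sum((a[i] - mean_a) ** 2 for i in shared)
    var_b = sum((b[i] - mean_b) ** 2 for i in shared)
    return cov / sqrt(var_a * var_b) if var_a and var_b else 0.0

def predict(user, item, ratings):
    """Similarity-weighted average of ratings from positively correlated users."""
    weighted_sum = weight_total = 0.0
    for other, other_ratings in ratings.items():
        if other == user or item not in other_ratings:
            continue
        sim = pearson(ratings[user], other_ratings)
        if sim > 0:
            weighted_sum += sim * other_ratings[item]
            weight_total += sim
    return weighted_sum / weight_total if weight_total else None

print(predict("B", "m4", ratings))
```

Here User B correlates positively with User A and negatively with User C, so the prediction for the unseen movie follows User A’s rating.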

Item-Item Collaborative Filtering

Item-item collaborative filtering is more scalable than user-user filtering because it reduces the complexity of comparing individual users. Instead, it compares items based on user interactions, so when a user interacts with an item, the system recommends other similar items.

For example, if a user watches a specific movie, item-item collaborative filtering can recommend movies that are frequently watched together or have been similarly rated by other users.

Challenges:

- Still requires extensive computational resources for large datasets.

- May recommend overly similar items, reducing the diversity of suggestions.

Matrix Factorization

Matrix factorization transforms collaborative filtering by decomposing the user-item interaction matrix into smaller matrices that capture latent factors underlying user preferences and item characteristics. By projecting both users and items into a shared latent space, matrix factorization can make predictions about how much a user will like an item.

Advantages:

- Handles sparse datasets efficiently, where many items have no explicit ratings or interactions.

- Captures more complex relationships between users and items than traditional collaborative filtering.

Challenges:

- Requires significant computational power, especially for large-scale systems.

- Sensitive to the choice of hyperparameters, which requires tuning for optimal performance.

Content-Based Filtering

Content-based filtering analyzes the features of items (such as metadata) and recommends items with similar features. This method is widely used in applications like recommending articles, books, or songs based on content properties.

Example: A news platform might recommend articles on politics to a user who frequently reads articles tagged with “politics.” The system looks at attributes like the article’s subject, author, or source to determine relevance.

Advantages:

- Works well for new items, and for users with only a short history, because it does not depend on other users’ interactions.

- More transparent than collaborative filtering because the system’s recommendations can be explained by item features.

Limitations:

- Limited diversity of recommendations. The system often recommends items that are too similar to what the user has already interacted with.

- Requires well-structured and comprehensive item metadata, which can be difficult to maintain in certain domains (e.g., multimedia content like music or video).

Deep Learning in Recommendations

With the advent of deep learning, recommendation systems have become even more sophisticated. Deep learning models can learn directly from unstructured data like images, videos, and text, which traditional models can use only after manual feature extraction.

Neural Collaborative Filtering

Neural collaborative filtering (NCF) uses deep neural networks to model complex user-item interactions. Instead of relying on simple similarity measures like cosine similarity or Pearson correlation, NCF learns embedding vectors for users and items and computes their interaction via a neural network.

Example: YouTube’s recommendation system uses deep neural networks to analyze a wide range of factors, from user viewing history to video metadata, in order to recommend videos. The system can adapt to changes in user behavior over time, offering personalized content that evolves with the user’s preferences.

Advantages:

- Can model non-linear and complex relationships between users and items.

- Adaptable to multiple types of input data, including images, text, and audio.
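The sketch below shows only the forward pass of an NCF-style scorer: user and item embeddings are concatenated, passed through one hidden layer with ReLU, and squashed to a score between 0 and 1. The weights are random and untrained, biases are omitted, and the layer sizes are assumptions; a real model would be trained with a framework such as TensorFlow.

```python
import random
from math import exp

rng = random.Random(0)
EMBED_DIM, HIDDEN = 4, 8  # assumed sizes for illustration

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

user_emb = rand_matrix(3, EMBED_DIM)       # 3 hypothetical users
item_emb = rand_matrix(5, EMBED_DIM)       # 5 hypothetical items
W1 = rand_matrix(HIDDEN, 2 * EMBED_DIM)    # hidden layer weights
W2 = rand_matrix(1, HIDDEN)                # output layer weights

def score(user_id, item_id):
    """Untrained forward pass: concat embeddings -> ReLU layer -> sigmoid."""
    x = user_emb[user_id] + item_emb[item_id]  # list concatenation
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in W1]
    logit = sum(w * h for w, h in zip(W2[0], hidden))
    return 1.0 / (1.0 + exp(-logit))

print(score(0, 1))
```

The ReLU layer is what lets the network capture non-linear interactions that a plain dot product between the two embeddings cannot express.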

Hybrid Systems

Hybrid systems combine collaborative filtering and content-based filtering to provide the best of both worlds. This allows systems to generate accurate recommendations even for new users or items by leveraging both user behavior and item metadata.

Example: Spotify uses a hybrid model that combines collaborative filtering with content-based filtering. Collaborative filtering helps recommend songs based on user listening behavior, while content-based filtering analyzes song features like tempo and genre to make more diverse recommendations.

Challenges:

- More complex to implement and optimize, as it requires balancing both approaches.

- May still require a significant amount of user interaction data to be fully effective.
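One simple way to balance the two approaches is a weighted blend whose weight shifts toward collaborative filtering as a user accumulates interactions. This is a sketch under assumptions: the linear ramp, the `ramp=20` value, and the function name are illustrative choices, and both input scores are assumed to be on the same 0 to 1 scale.

```python
def hybrid_score(cf_score, content_score, n_interactions, ramp=20):
    """Blend two scores; the CF weight grows linearly until `ramp` interactions."""
    alpha = min(n_interactions / ramp, 1.0)
    return alpha * cf_score + (1 - alpha) * content_score

# A brand-new user relies entirely on the content-based score
print(hybrid_score(cf_score=0.9, content_score=0.3, n_interactions=0))
# A user with a long history relies entirely on the collaborative score
print(hybrid_score(cf_score=0.9, content_score=0.3, n_interactions=50))
```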

5. Case Study: How Top Companies Implement Recommendation Systems

Netflix

Netflix’s recommendation system is a prime example of how collaborative filtering has evolved into a sophisticated hybrid model. Early on, Netflix’s system relied heavily on collaborative filtering techniques, but over time, it became apparent that combining multiple approaches was necessary for higher accuracy and user satisfaction.

Netflix made its recommendation system famous through the “Netflix Prize,” which offered $1 million to the first team that could improve the prediction accuracy of its existing algorithm by 10%. The winning solution, which combined matrix factorization with ensemble methods, spurred further development in recommendation system research.

Today, Netflix uses a combination of collaborative filtering, content-based techniques, and deep learning to personalize content recommendations. The system considers not only user viewing history but also global viewing trends, genre preferences, and even the timing of content consumption.

Interview Focus: Netflix typically asks candidates how they would approach building scalable, accurate recommendation systems. Expect questions about handling sparse datasets, cold start problems, and scalability challenges, given Netflix’s global scale.

Amazon

Amazon’s recommendation system is one of the most powerful and influential systems in e-commerce. At its core, Amazon uses item-item collaborative filtering to analyze product interactions. This approach allows Amazon to recommend related products based on customer purchasing history, browsing patterns, and even wishlist behavior.

Amazon’s recommendation system is critical for driving product discovery, cross-selling, and upselling. With hundreds of millions of products in its catalog and hundreds of millions of customers worldwide, scalability is a key concern for Amazon. The system must provide real-time, personalized recommendations while processing vast amounts of data.

Interview Focus: Amazon typically focuses on scalability in their recommendation system interview questions. Be prepared to discuss how you would optimize algorithms to handle massive datasets and how you would design systems that offer low-latency recommendations.

Spotify

Spotify’s recommendation engine is a standout example of how to combine collaborative filtering with content-based filtering to create a personalized user experience. Spotify uses collaborative filtering to analyze listening patterns and recommend songs, albums, or artists that other similar users have liked. On the content-based side, Spotify’s algorithm analyzes song features, such as tempo, genre, and mood, to recommend music based on the characteristics of songs you’ve listened to.

Spotify also uses Natural Language Processing (NLP) to analyze song lyrics and recommend songs based on themes or topics. This makes their recommendation engine capable of delivering personalized suggestions even when user interaction data is sparse.

Interview Focus: Spotify focuses on hybrid recommendation models in their interviews. You can expect questions about combining collaborative filtering with content-based methods to create a more dynamic recommendation system. Be ready to discuss NLP-based approaches for processing unstructured data like song lyrics.

6. Top 5 Most Frequently Asked Questions at FAANG Companies

Question 1: Describe how collaborative filtering works.

Answer: Collaborative filtering leverages user-item interactions to make recommendations. There are two classic neighborhood-based types:

- User-User: Finds similar users based on their preferences and recommends items those users liked.

- Item-Item: Identifies similarities between items and suggests items similar to those a user has interacted with.

Matrix factorization is often used on top of these ideas to reduce dimensionality and improve accuracy.

Question 2: How do you handle the cold start problem in recommendation systems?

Answer: The cold start problem can be tackled using:

- Content-Based Recommendations: Use item metadata (e.g., product descriptions) for recommendations.

- Hybrid Systems: Combine collaborative filtering and content-based methods to mitigate the lack of data.

Question 3: Explain the evaluation metrics you’d use to assess the performance of a recommendation engine.

Answer: Key metrics include:

- Precision: Measures how many recommended items are relevant.

- Recall: Looks at how many relevant items were recommended.

- NDCG: Focuses on the ranking of relevant items.

You should choose the metric based on the use case: whether the goal is relevance, ranking, or coverage.

Question 4: How would you scale a recommendation system to handle millions of users?

Answer: To scale, consider:

- Matrix Factorization: Reduces dimensionality and speeds up recommendations.

- Embeddings: Help store and process high-dimensional data efficiently.

- Distributed Systems: Use technologies like Apache Spark or Hadoop for data processing at scale.

Question 5: Can you explain a hybrid recommendation system and why companies use it?

Answer: A hybrid system combines collaborative filtering and content-based filtering. This offers higher accuracy by leveraging user behavior and item metadata, overcoming limitations of using either method alone.

7. Cracking the Interview: Tips and Strategies

Common Interview Questions

Prepare for questions like:

- “How would you build a recommendation system for a new user?”

- “Explain matrix factorization in the context of recommendation systems.”

- “How would you evaluate a recommendation system’s performance?”

How to Approach the Interview

- Mock Interviews: Practice is key. Platforms like InterviewNode can help you simulate real interview scenarios.

- Explain the Intuition: Focus on explaining the intuition behind algorithms. Interviewers value clear communication of ideas.

- Problem-Solving: Break complex problems into smaller, manageable parts.

Must-Know Resources

- Books: Recommender Systems Handbook is a valuable resource for understanding algorithms and implementation techniques.

- Courses: Online platforms like Coursera offer courses on recommendation systems.

- Research Papers: The “Netflix Prize” papers are a great starting point to explore advanced recommendation algorithms.

8. Conclusion

Recommendation systems are at the core of modern ML interviews at companies like Netflix, Amazon, and Google. By mastering the key concepts, algorithms, and strategies covered in this blog, you’ll be well-prepared to ace any recommendation system interview. The field is dynamic, but with the right preparation, you can stand out and showcase your ability to build scalable, accurate systems.

Start your journey today with InterviewNode’s resources, including mock interviews and tutorials, to ensure you’re ready for your next big ML interview challenge. Mastering recommendation systems can open doors to some of the most exciting roles in the industry.
